Metacognition is all you need?

Using Introspection in Generative Agents to Improve Goal-directed Behavior

Jason Toy, Josh MacAdam, Phil Tabor

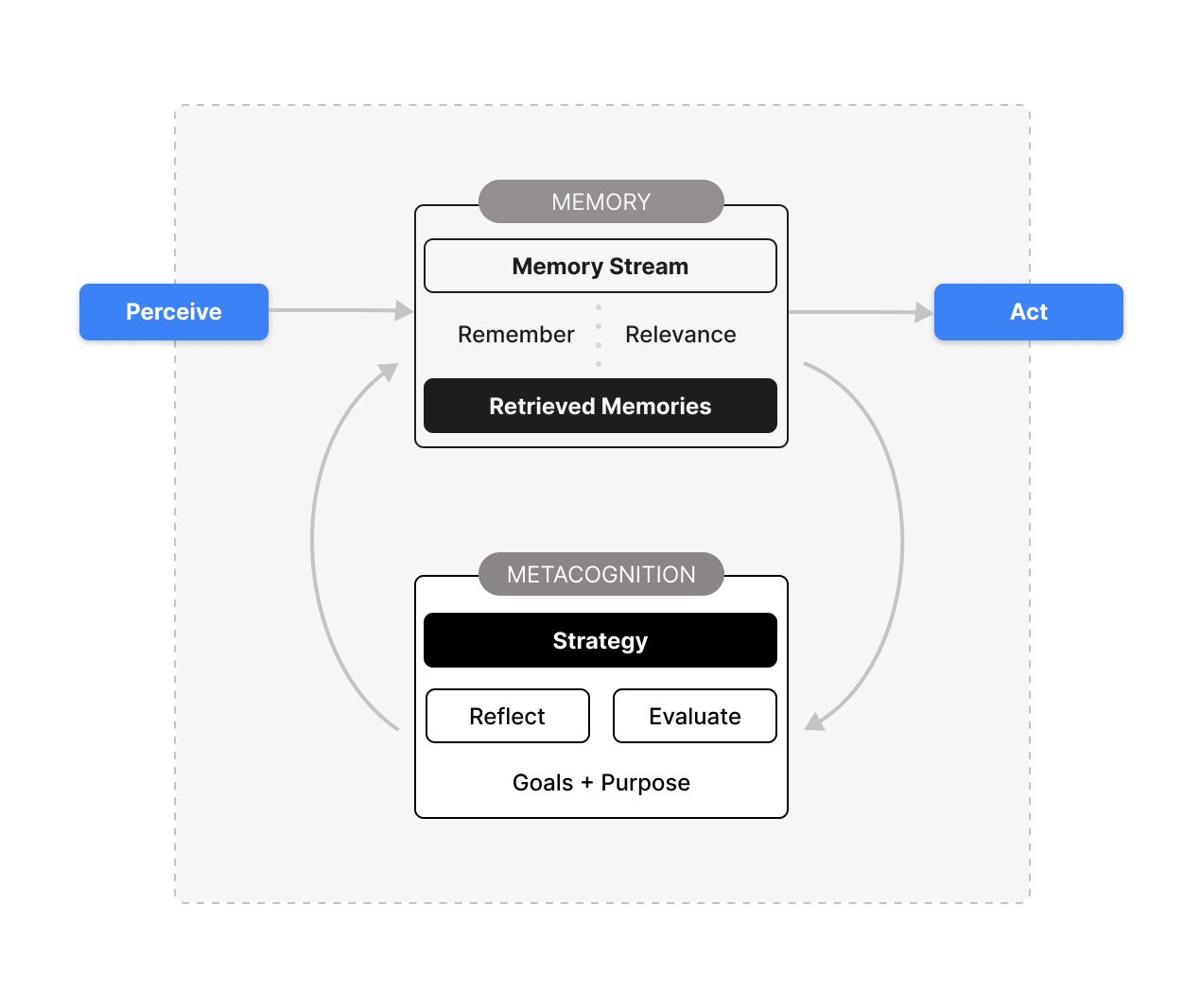

Recent advances in Large Language Models (LLMs) have shown impressive capabilities in various applications, yet LLMs face challenges such as limited context windows and difficulties in generalization. In this paper, we introduce a metacognition module for generative agents, enabling them to observe their own thought processes and actions. This metacognitive approach, designed to emulate System 1 and System 2 cognitive processes, allows agents to significantly enhance their performance by modifying their strategy. We tested the metacognition module on a variety of scenarios, including a situation where generative agents must survive a zombie apocalypse, and observed that our system outperforms others, with agents adapting and improving their strategies to complete tasks over time.


Overview

- LLMs are increasingly being used for agents with perpetual memories and chats

- Limited context lengths make LLM systems challenging to build

- An agent with a goal can easily get stuck in unforeseen and unprogrammed situations

- An agent's strategy to achieve a goal must adapt to the situation over time, and our framework allows for that

- A metacognition module on top of an LLM allows a goal-oriented system to improve performance and adapt dynamically over time

- Agents have a virtual memory external to an LLM that can grow beyond typical context lengths

- LLMs can be used to create dynamic interactive simulations with human-like, believable agents
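The ideas in the list above can be illustrated with a minimal sketch. It is not the paper's implementation: the names `Memory`, `Agent`, `act`, `reflect`, and `stub_llm`, the `progress` score, and the `threshold` and `window` parameters are all hypothetical, and mapping `act` to a fast System 1 path and `reflect` to a slower System 2 path is an assumption made for illustration. The sketch shows a memory kept outside the LLM prompt, with only a recent slice passed to the model, and a reflection step that replaces the agent's strategy when progress toward the goal is poor.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Memory:
    """Hypothetical external memory: an append-only log kept outside the prompt."""
    entries: List[str] = field(default_factory=list)

    def add(self, text: str) -> None:
        self.entries.append(text)

    def recent(self, k: int) -> List[str]:
        # Only the last k entries go into a prompt, so the log itself can
        # grow beyond the model's context length.
        return self.entries[-k:] if k > 0 else []


@dataclass
class Agent:
    goal: str
    strategy: str
    llm: Callable[[str], str]  # assumption: any text-in, text-out function
    memory: Memory = field(default_factory=Memory)
    window: int = 5  # hypothetical number of memory entries shown to the LLM

    def act(self, observation: str) -> str:
        """Fast path: pick the next action under the current strategy."""
        self.memory.add(f"observed: {observation}")
        prompt = (
            f"Goal: {self.goal}\nStrategy: {self.strategy}\n"
            f"Recent: {self.memory.recent(self.window)}\nNext action:"
        )
        action = self.llm(prompt)
        self.memory.add(f"did: {action}")
        return action

    def reflect(self, progress: float, threshold: float = 0.5) -> bool:
        """Slow path: if progress is below threshold, ask for a new strategy."""
        if progress >= threshold:
            return False
        prompt = (
            f"Goal: {self.goal}\nCurrent strategy: {self.strategy}\n"
            f"Recent: {self.memory.recent(self.window)}\n"
            "The strategy is not working. Propose a better strategy:"
        )
        self.strategy = self.llm(prompt)
        self.memory.add(f"new strategy: {self.strategy}")
        return True


def stub_llm(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    if "Propose a better strategy" in prompt:
        return "avoid open streets and move between buildings"
    return "search the nearest building"
```

In use, a caller would alternate `agent.act(observation)` with an occasional `agent.reflect(progress)`, where `progress` is whatever goal-specific score the simulation provides (for example, how long the agent has survived). How progress is measured is left open here and would need to be defined per scenario.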

See Simulations

Citation

@article{toy2024metacognition,
  title={Metacognition is all you need? Using Introspection in Generative Agents to Improve Goal-directed Behavior},
  author={Jason Toy and Josh MacAdam and Phil Tabor},
  year={2024},
  journal={arXiv preprint arXiv:2401.10910}
}
